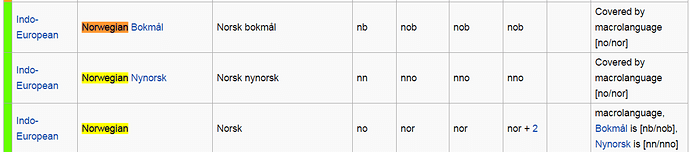

Welcome to the community, Eirik. I am the maintainer for Spanish. I live in Norway, and adding Norwegian (Bokmål) to LT is on my wishlist, so please count on my help if you decide to go forward.

LT began as a set of rules written in XML, backed by Java rules for the cases where XML was not enough. As Daniel pointed out, the very first task in getting familiar with LT is to look at the rules of a language that is accessible to you. In your case, I think English will do, and from what I know German shares a lot of grammar with Norwegian, so it will be helpful too.

In my view, the main requirements are a good capacity for abstraction and proficiency with regular expressions. These will allow you to design good rules that are broad and general, instead of a large set of brute-force rules. For instance, in order to detect agreement mismatches like

boken er fint

boka er fint

Instead of two separate rules, you can write only one with

bok(a|en) er fint
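To make that concrete, here is a minimal sketch in plain Python (not LT's XML rule syntax) showing that the single pattern catches both sentences. The function name `find_mismatches` is made up for the illustration.

```python
import re

# One pattern covers both definite forms of "bok" (boka / boken)
# followed by the neuter adjective form "fint".
PATTERN = re.compile(r"\bbok(a|en) er fint\b", re.IGNORECASE)


def find_mismatches(text):
    """Return every piece of text that matches the pattern."""
    return [m.group(0) for m in PATTERN.finditer(text)]
```

A correct sentence such as "boka er fin" is left alone, because the pattern requires the whole word "fint".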

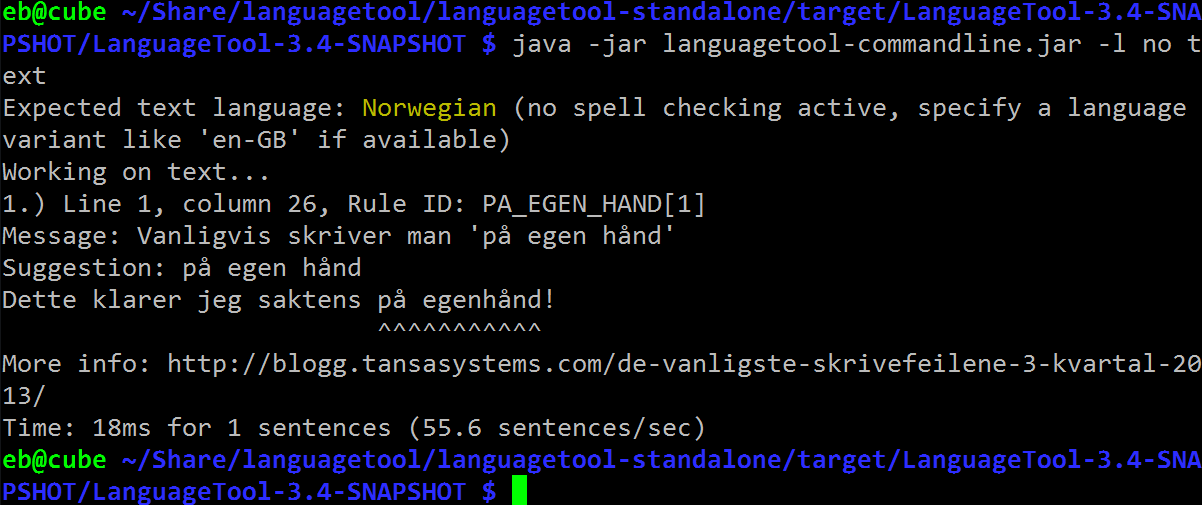

Grammar analysis is done in several stages:

- Segmentation

- Tagging

- Disambiguation

- Rule evaluation

- Suggestion synthesis

Of these stages, only segmentation and rule evaluation are mandatory. The rest are supported but need to be implemented. There are currently more stages, because there are statistical checks using n-gram data and confusion sets, but I am not yet familiar with them, and the documentation about the process in the wiki is getting a little old.

Segmentation divides the text into sentences and the sentences into tokens.
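As a rough idea of what this stage produces, here is a naive sketch in Python. It is only an illustration of the concept and not how LT segments text; the helper names `split_sentences` and `tokenize` are my own, and real text needs much more care (abbreviations, numbers, quotes).

```python
import re


def split_sentences(text):
    # Naive split: break after ".", "!" or "?" when whitespace follows.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence):
    # A token is either a run of word characters or one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", sentence)
```

For example, `tokenize("Boka er fin.")` gives the three words plus the final period as four separate tokens.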

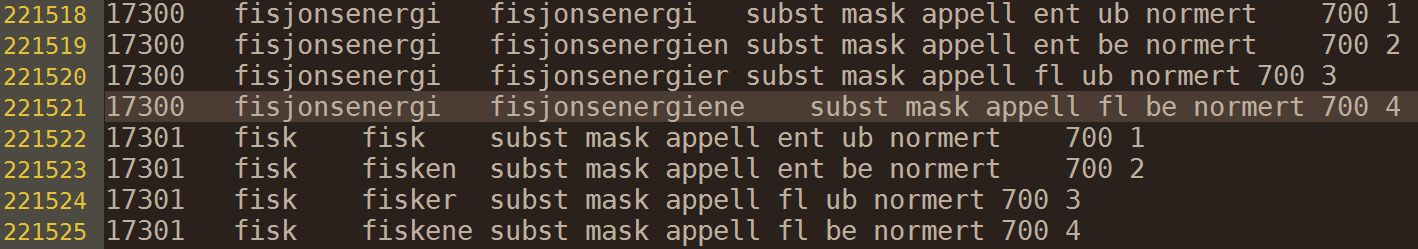

Tagging assigns all possible Part of Speech (PoS) tags to all tokens.

Disambiguation corrects PoS for the tokens via disambiguation rules.

Rule evaluation is where sentences are matched with the rules.

Suggestion synthesis is where the proposed correction is generated from a lemma and a PoS tag, e.g. starting from a singular form, the system comes up with the plural of the same lemma.
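The last four stages can be sketched with a toy example in Python. Everything here is an assumption made for illustration: the tag names, the tiny lexicon, the single disambiguation rule and the function names are invented and do not reflect LT's real tagsets or APIs. Masculine and feminine are lumped together as `mf` to keep it short.

```python
# Toy lexicon: surface form -> possible (lemma, tag) readings. Tags are made up.
LEXICON = {
    "boken": [("bok", "NOUN:mf")],
    "boka": [("bok", "NOUN:mf")],
    "huset": [("hus", "NOUN:n")],
    "er": [("være", "VERB")],
    "fin": [("fin", "ADJ:mf")],
    "fint": [("fin", "ADJ:n"), ("fint", "ADV")],
}
# Reverse table used for synthesis: (lemma, tag) -> surface form.
SYNTH = {("fin", "ADJ:mf"): "fin", ("fin", "ADJ:n"): "fint"}


def tag(tokens):
    """Tagging: attach every possible reading to each token."""
    return [(tok, list(LEXICON.get(tok.lower(), []))) for tok in tokens]


def disambiguate(tagged):
    """Disambiguation: right after 'være', keep only adjective readings if any."""
    result = []
    for i, (tok, readings) in enumerate(tagged):
        after_copula = i > 0 and any(lemma == "være" for lemma, _ in tagged[i - 1][1])
        if after_copula and any(t.startswith("ADJ") for _, t in readings):
            readings = [r for r in readings if r[1].startswith("ADJ")]
        result.append((tok, readings))
    return result


def check_agreement(tagged):
    """Rule evaluation and synthesis for the pattern NOUN + 'være' + ADJ."""
    suggestions = []
    for (_, noun), (_, verb), (adj_tok, adj) in zip(tagged, tagged[1:], tagged[2:]):
        # Only fire when noun and adjective are unambiguous and the verb is 'være'.
        if len(noun) != 1 or len(adj) != 1:
            continue
        if not any(lemma == "være" for lemma, _ in verb):
            continue
        (_, noun_tag), (adj_lemma, adj_tag) = noun[0], adj[0]
        if noun_tag.startswith("NOUN:") and adj_tag.startswith("ADJ:"):
            gender = noun_tag.split(":")[1]
            if adj_tag.split(":")[1] != gender:
                # Synthesis: same lemma, but with the noun's gender.
                suggestions.append((adj_tok, SYNTH[(adj_lemma, "ADJ:" + gender)]))
    return suggestions
```

With this, `check_agreement(disambiguate(tag(["Boken", "er", "fint"])))` flags "fint" and proposes "fin", and the same single rule also handles "Huset er fin" without any extra pattern. If you skip `disambiguate`, the rule stays silent on "fint" because the adverb reading is still there, which is the kind of case the disambiguator is meant to resolve.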

This introduction is just to let you know that LT is feature-rich and, even though it may seem intimidating, you can start with a simple brute-force rule matcher and grow it into a full-featured intelligent grammar proofer.

There is a caveat, however. If you are as lazy as I am, you will learn that introducing tagging simplifies your ruleset (for the rule evaluation stage) a lot. Disambiguation brings a further noticeable simplification. The more complete the tagger dictionary, the simpler the ruleset gets. This means that you should seriously consider starting ASAP with a tagger dictionary and implementing the disambiguator before the ruleset grows beyond control and technical debt accumulates.

That is an architectural decision to make if you are to maintain Norwegian.

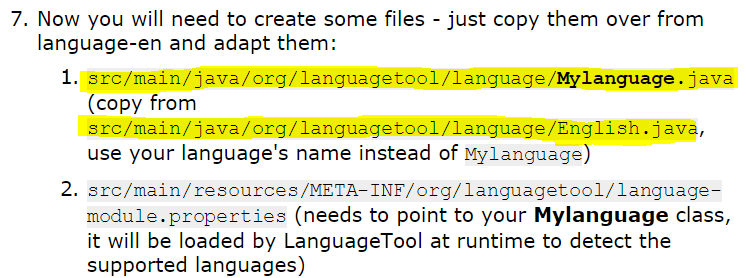

About tooling, I find the rule editor a great entry point for newcomers. Unfortunately, it is not available for unsupported languages, so I suggest we add initial support for Norwegian, maybe on a separate branch or in an incubator, if possible.