Hi,

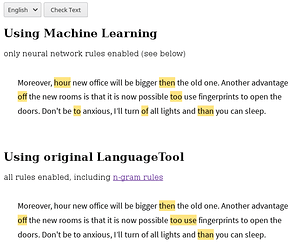

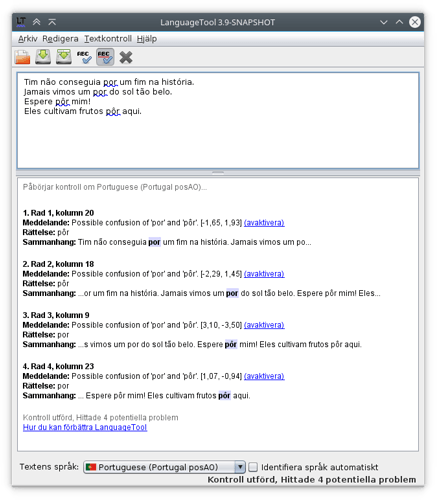

as part of my project work at university, I looked into detecting confused words (e. g. to/too, than/then) using the word2vec model by Mikolov et al and neural networks (which get 5-grams as input). I’ve integrated some neural network word confusion rules into LanguageTool (source code) and a small online demo for German and English is available here. (The forms are sometimes buggy and in case my LanguageTool server crashes I have to restart it by hand.)

So in which way are the neural network based rules better than the existing 3-gram rules?

-

Smaller files: The zipped n-gram data for German has a size of 1.6 GB, whereas the language model for the neural network has a size of 83 MiB (+ 12 KB for each confusion pair).

-

The n-gram rules can only detect an error if the correct 3-gram is part of the corpus, but the neural network can also detect errors if a similar 5-gram was part of the training corpus.

And what are the disadvantages?

-

As always with neural networks: You cannot really say what they actually learned, what they “think”. We can only see that it works.

-

Compared with the 3-gram rules, recall is worse for the same level of precision. That’s why the rules are calibrated such that precision is > 99 % (> 99.9 % would be better for everyday use, though).